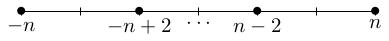

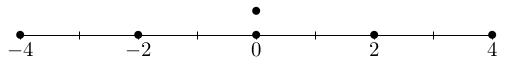

We have now established the following theorem.

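Any irrep of $SU(2)$ is isomorphic to $\mathrm{Sym}^n(\mathbb{C}^2)$ for some $n \geq 0$, and its weight diagram consists of the weights $-n, -n+2, \ldots, n-2, n$, each with a 1-dimensional weight space.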

There is also a complete reducibility theorem:

Any finite-dimensional representation splits as a direct sum of irreps.

This is proved in exactly the same way as for representations of $U(1)$ once you understand how to integrate (average) over the group $SU(2)$. Instead of talking about that, I will work through some examples, decomposing representations into irreducibles.

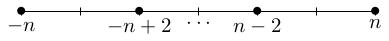

Consider the standard representation tensored with itself: $\mathbb{C}^2 \otimes \mathbb{C}^2$. This will turn out to have a weight diagram with weights $-2$, $0$, $0$, $2$.

In other words, there are 1-dimensional weight spaces with weights $-2$ and $2$ and a 2-dimensional weight space in weight $0$.

Inside this diagram, you can see the weight diagram of $\mathrm{Sym}^2(\mathbb{C}^2)$ (weights $-2$, $0$, $2$) as a subdiagram. When you remove this subdiagram, what's left is a single weight $0$, which is the weight diagram of the trivial 1-dimensional representation.

This will allow us to deduce that $\mathbb{C}^2 \otimes \mathbb{C}^2 \cong \mathrm{Sym}^2(\mathbb{C}^2) \oplus \mathbb{C}$.

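A basis for $\mathbb{C}^2 \otimes \mathbb{C}^2$ is given by $e_1 \otimes e_1$, $e_1 \otimes e_2$, $e_2 \otimes e_1$, $e_2 \otimes e_2$ (where $e_1, e_2$ is the standard basis for $\mathbb{C}^2$). We have $He_1 = e_1$ and $He_2 = -e_2$, where $H = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$. Because $H$ lives in the Lie algebra, we evaluate $H(e_1 \otimes e_1)$ using the product rule:

$$H(e_1 \otimes e_1) = He_1 \otimes e_1 + e_1 \otimes He_1 = 2\, e_1 \otimes e_1.$$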

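Therefore $e_1 \otimes e_1$ is an eigenvector for $H$ with eigenvalue $2$, so it lives in the weight space $W_2$. Similarly we get $H(e_1 \otimes e_2) = H(e_2 \otimes e_1) = 0$, which give us the 2-dimensional weight space $W_0$, and $H(e_2 \otimes e_2) = -2\, e_2 \otimes e_2$, which gives the weight space $W_{-2}$. So our weight spaces are:

$$W_2 = \langle e_1 \otimes e_1 \rangle, \qquad W_0 = \langle e_1 \otimes e_2,\ e_2 \otimes e_1 \rangle, \qquad W_{-2} = \langle e_2 \otimes e_2 \rangle.$$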
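The product rule computation above can be checked numerically. The following is a minimal sketch, assuming the convention $H = \mathrm{diag}(1, -1)$ on the standard basis and using NumPy's `np.kron` to model the tensor product; the variable names are illustrative only.

```python
import numpy as np

# Assumed convention: H = diag(1, -1) in the standard basis e1, e2 of C^2.
H = np.diag([1, -1])
I2 = np.eye(2, dtype=int)

# Product rule: H acts on C^2 (x) C^2 as H (x) I + I (x) H.
H_tensor = np.kron(H, I2) + np.kron(I2, H)

# np.kron orders the basis as e1(x)e1, e1(x)e2, e2(x)e1, e2(x)e2.
# H_tensor is diagonal, so its diagonal entries are the weights.
weights = np.diag(H_tensor).tolist()
print(weights)
```

The diagonal entries are the weights of the four basis tensors in the order listed in the comment, so the multiplicity of each weight can be read off directly.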

Following the procedure we used in proving the classification theorem, we pick a highest weight vector $v = e_1 \otimes e_1$ and use the lowering operator $Y$ to generate a subrepresentation spanned by:

$$v, \quad Yv, \quad Y^2 v.$$

These three vectors live in the weight spaces $W_2$, $W_0$ and $W_{-2}$ respectively, and give a subrepresentation isomorphic to $\mathrm{Sym}^2(\mathbb{C}^2)$ (because it has the right weight diagram).

There is an invariant Hermitian inner product on the representation, and we take the orthogonal complement to get a complementary subrepresentation. Since this complement is 1-dimensional, it must be isomorphic to the trivial 1-dimensional representation (the only irrep of this dimension).

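We deduce that $\mathbb{C}^2 \otimes \mathbb{C}^2 \cong \mathrm{Sym}^2(\mathbb{C}^2) \oplus \mathbb{C}$. This should not be a surprise, because $\mathrm{Sym}^2(\mathbb{C}^2)$ was defined to be a subrepresentation of $\mathbb{C}^2 \otimes \mathbb{C}^2$; in fact, it was defined to be the subrepresentation spanned by the symmetric tensors $e_1 \otimes e_1$, $e_1 \otimes e_2 + e_2 \otimes e_1$, and $e_2 \otimes e_2$.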

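Let's check that this is what we are getting. Since $Y = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}$ we have $Ye_1 = e_2$ and $Ye_2 = 0$. If $v = e_1 \otimes e_1$ then $Yv = e_2 \otimes e_1 + e_1 \otimes e_2$ and $Y^2 v = 2\, e_2 \otimes e_2$, so we get precisely $\mathrm{Sym}^2(\mathbb{C}^2)$ on the nose.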
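The same check can be run numerically. This is a small sketch, assuming the lowering operator is the matrix $Y$ with $Ye_1 = e_2$ and $Ye_2 = 0$ and that it acts on the tensor square by the product rule; the names `Y_tensor`, `Yv` and `YYv` are illustrative.

```python
import numpy as np

# Assumed convention for the lowering operator: Y e1 = e2, Y e2 = 0.
Y = np.array([[0, 0],
              [1, 0]])
I2 = np.eye(2, dtype=int)

# Product rule: Y acts on C^2 (x) C^2 as Y (x) I + I (x) Y.
Y_tensor = np.kron(Y, I2) + np.kron(I2, Y)

# Coordinates in the basis e1(x)e1, e1(x)e2, e2(x)e1, e2(x)e2.
v = np.array([1, 0, 0, 0])   # highest weight vector e1 (x) e1
Yv = Y_tensor @ v            # expected: e1(x)e2 + e2(x)e1
YYv = Y_tensor @ Yv          # expected: 2 e2(x)e2
YYYv = Y_tensor @ YYv        # expected: 0, so the chain stops here

print(Yv, YYv, YYYv)
```

The three nonzero vectors are symmetric under swapping the two tensor factors, which matches the description of the subrepresentation as the symmetric tensors.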

The complementary subrepresentation turns out to be spanned by the antisymmetric tensor $e_1 \otimes e_2 - e_2 \otimes e_1$, which spans the exterior square $\Lambda^2(\mathbb{C}^2)$. It's an exercise to check that this antisymmetric tensor does span a subrepresentation (i.e. it is fixed by the action of $SU(2)$).

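Let's figure out the decomposition of $\mathrm{Sym}^2(\mathrm{Sym}^2(\mathbb{C}^2))$. We know that $\mathrm{Sym}^2(\mathbb{C}^2)$ is spanned by $e_1^2$, $e_1 e_2$, and $e_2^2$. In the same way we can think of $\mathrm{Sym}^2(\mathbb{C}^2)$ as quadratic polynomials in $e_1, e_2$, we can think of $\mathrm{Sym}^2(\mathrm{Sym}^2(\mathbb{C}^2))$ as quadratic polynomials in $e_1^2$, $e_1 e_2$, and $e_2^2$. It therefore has a basis

$$(e_1^2)^2, \quad (e_1^2)(e_1 e_2), \quad (e_1^2)(e_2^2), \quad (e_1 e_2)^2, \quad (e_1 e_2)(e_2^2), \quad (e_2^2)^2.$$

Note that $(e_1^2)(e_2^2)$ and $(e_1 e_2)^2$ are different elements here, because $e_1^2$, $e_1 e_2$ and $e_2^2$ are treated as independent variables.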

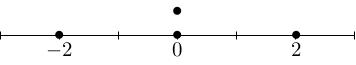

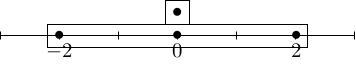

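Let's calculate the weight space decomposition. We know that $He_1^2 = 2e_1^2$, $H(e_1 e_2) = 0$ and $He_2^2 = -2e_2^2$, so (using the product rule) we have

$$H (e_1^2)^2 = 4 (e_1^2)^2, \qquad H (e_1^2)(e_1 e_2) = 2 (e_1^2)(e_1 e_2), \qquad H (e_1^2)(e_2^2) = 0,$$

$$H (e_1 e_2)^2 = 0, \qquad H (e_1 e_2)(e_2^2) = -2 (e_1 e_2)(e_2^2), \qquad H (e_2^2)^2 = -4 (e_2^2)^2.$$

The weight space decomposition is therefore:

$$W_4 = \langle (e_1^2)^2 \rangle, \quad W_2 = \langle (e_1^2)(e_1 e_2) \rangle, \quad W_0 = \langle (e_1^2)(e_2^2),\ (e_1 e_2)^2 \rangle, \quad W_{-2} = \langle (e_1 e_2)(e_2^2) \rangle, \quad W_{-4} = \langle (e_2^2)^2 \rangle,$$

with a weight diagram consisting of the weights $-4$, $-2$, $0$, $0$, $2$, $4$.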
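The weights of a symmetric square can also be listed mechanically: each degree-2 monomial in a basis of weight vectors has weight equal to the sum of the two weights involved. Here is a minimal sketch of that bookkeeping; the helper name `sym2_weights` is hypothetical and it takes as input the list of weights of a basis of weight vectors.

```python
from collections import Counter
from itertools import combinations_with_replacement

def sym2_weights(weights):
    """Weight multiplicities of Sym^2(V), given the weights of a weight basis of V.

    A basis of Sym^2(V) is given by unordered pairs of basis vectors of V,
    and by the product rule the weight of a pair is the sum of the two weights.
    """
    return Counter(a + b for a, b in combinations_with_replacement(weights, 2))

# Sym^2(C^2) has weight basis e1^2, e1 e2, e2^2 with weights 2, 0, -2.
mult = sym2_weights([2, 0, -2])
for weight in sorted(mult, reverse=True):
    print(weight, mult[weight])
```

The same helper can be applied to any list of weights, which makes it easy to experiment with other symmetric squares.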

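From this you can see that $\mathrm{Sym}^2(\mathrm{Sym}^2(\mathbb{C}^2)) \cong \mathrm{Sym}^4(\mathbb{C}^2) \oplus \mathbb{C}$. The argument is exactly as before: you pick a highest weight vector like $v = (e_1^2)^2$ and you generate a highest weight subrepresentation spanned by $v, Yv, Y^2 v, Y^3 v, Y^4 v$, which is isomorphic to $\mathrm{Sym}^4(\mathbb{C}^2)$ by the classification theorem. Then you take the orthogonal complement of $\mathrm{Sym}^4(\mathbb{C}^2)$ with respect to an invariant Hermitian inner product and you get a complementary subrepresentation whose weight diagram is obtained from the weight diagram of $\mathrm{Sym}^2(\mathrm{Sym}^2(\mathbb{C}^2))$ by stripping off the weight diagram of $\mathrm{Sym}^4(\mathbb{C}^2)$. What remains is a single weight $0$, so the complement is the trivial representation.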
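The stripping procedure can be written as a short loop: find the highest remaining weight $n$, remove one copy of each of $-n, -n+2, \ldots, n$, and repeat. The sketch below assumes its input is the list of weights (with multiplicity) of a genuine finite-dimensional representation; the function name `decompose` is hypothetical.

```python
from collections import Counter

def decompose(weights):
    """Return the highest weights n of the irreps Sym^n(C^2) in the decomposition.

    `weights` lists every weight with its multiplicity. The function raises
    ValueError if the list cannot be the weight diagram of a representation.
    """
    mult = Counter(weights)
    highest = []
    while any(m > 0 for m in mult.values()):
        n = max(w for w, m in mult.items() if m > 0)
        if n < 0:
            raise ValueError("weights are not symmetric about 0")
        # Strip off the weight diagram of Sym^n(C^2): -n, -n+2, ..., n.
        for w in range(-n, n + 1, 2):
            if mult[w] <= 0:
                raise ValueError(f"missing weight {w}")
            mult[w] -= 1
        highest.append(n)
    return highest

print(decompose([4, 2, 0, 0, -2, -4]))  # weights found for Sym^2(Sym^2(C^2))
print(decompose([2, 0, 0, -2]))         # weights found for C^2 (x) C^2
```

Each entry $n$ in the returned list stands for one summand $\mathrm{Sym}^n(\mathbb{C}^2)$, with $n = 0$ being the trivial representation. Note that this only identifies the summands up to isomorphism; finding explicit vectors spanning them still requires the argument with highest weight vectors.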

The trivial complementary subrepresentation has to be spanned by some combination of $(e_1^2)(e_2^2)$ and $(e_1 e_2)^2$, as these span the 0-weight space. In this case, I claim that the trivial complementary subrepresentation is spanned by $(e_1^2)(e_2^2) - (e_1 e_2)^2$ (it will be an exercise to check this).

Compute the weight diagrams and decomposition of $\mathrm{Sym}^m(\mathbb{C}^2) \otimes \mathrm{Sym}^n(\mathbb{C}^2)$ for a few different values of $m$ and $n$ and see if you can formulate a conjecture as to what the answer should be in general.