A sequence M_k of matrices converges to a matrix M if, for all positive epsilon, there exists an N such that norm of (M_k minus M) is strictly less than epsilon whenever k is bigger than or equal to N.

Optional: Matrix norms

Matrix norms

We defined exp of M equals sum from n = 0 to infinity of one over n factorial times M to the n, M to the zero equals the identity, and claimed that it converges. What does it mean for a sequence of matrices to converge?

Here, norm denotes a matrix norm, i.e. a way of measuring how "big" a matrix is.

A

-

(triangle inequality) norm of M_1 plus M_2 is less than or equal to norm M_1 plus norm M_2 for all M_1,M_2 in little g l n R;

-

norm of lambda M equals the absolute value of lambda times the norm of M for all M in little g l n R and lambda in R;

-

norm of M equals zero if and only if M = 0.

We will focus on two particular matrix norms.

L1 norm

The L 1-norm of M, is L 1 norm of M equals the sum of absolute values of all matrix entries. So a matrix is "big in the L^1 norm" if it has an entry with large absolute value.

If L 1 norm of M then M_(ij) for all i,j, so M = 0. If we rescale M by lambda then all entries are scaled by lambda, so L 1 norm of M equals the absolute value of lambda times the L 1 norm of M. The triangle inequality can be deduced by applying the triangle inequality for absolute values to each matrix entry.

Operator norm

The

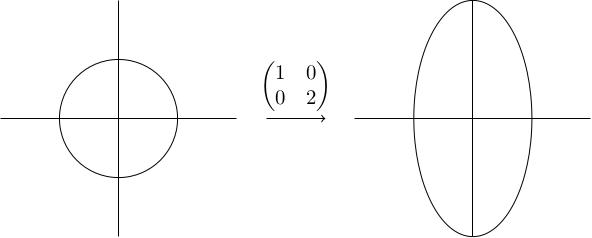

Another way to think of this is: you take the unit sphere in R n, you apply M to obtain an ellipsoid, and you take the furthest distance of a point on this ellipsoid from the origin.

Take M to be the 2-by-2 matrix 1, 0, 0, 2. The x-axis is fixed and the y-axis is rescaled by a factor of 2. The unit circle therefore becomes an ellipse with height 2 and width 1. The furthest point from the origin is a distance 2 from the origin (either the north or south pole), so operator norm of M equals 2.

To see that the operator norm is a norm, note that:

-

If operator norm of M then all points on the ellipsoid image of the unit sphere are a distance 0 from the origin, so the image of the unit sphere is the origin. Therefore M = 0.

-

If you rescale M by lambda then the lengths of the vectors M u over which we are taking the maximum are rescaled by the absolute value of lambda, so operator norm of lambda M equals the absolute value of lambda times the operator norm of M.

-

To prove the triangle inequality, note that length of ((M_1 plus M_2) applied to u) equals the length of (M_1 u plus M_2 u), which is less than or equal to the length of M_1 u plus the length of M_2 u, which is less than or equal to the operator norm of M_1 plus the operator norm of M_2. Since the operator norm of M_1 plus M_2 equals the maximum of the length of (M_1 plus M_2) applied to u where u runs over unit vectors, this shows operator norm of M_1 plus M_2 is less than or equal to the operator norm of M_1 plus the operator norm of M_2.

Lipschitz equivalence

Any two matrix norms on little g l n R are Lipschitz equivalent. For the two norms we've met so far, this means there exist constants C_1, C_2, D_1, D_2 (independent of M) such that: C_1 times the L 1 norm of M is less than or equal to the operator norm of M, which is in turn less than C_2 times the L 1 norm of M and D_1 times the operator norm of M is less than or equal to the L 1 norm of M, which is in turn less than or equal to D_2 times the operator norm of M

These inequalities will be useful in the proof of convergence: sometimes it's easier to bound one or other, and this is telling us that if you can bound one then you can bound the other. It also tells us that the notion of convergence we defined (where we had implicitly picked a matrix norm) doesn't depend on which norm we picked.

We won't prove the lemma, but for those who are interested, it's true more generally that any two norms on a finite-dimensional vector space are Lipschitz equivalent. This fails for infinite-dimensional vector spaces, but we're working with little g l n R which is n squared-dimensional.

Properties of the operator norm

We will now prove some useful properties of the operator norm. Since we're only focusing on this norm, we will drop the subscript op and write it as norm M.

-

For any vector v in R n, length of M v is less than or equal to norm M times the length of v.

-

norm of the product (M_1 M_2) is less than or equal to norm M_1 times norm M_2.

-

norm of (M to the p) is less than or equal to (norm of M) to the p.

-

Write v as u times the length of v for some unit vector u. Then length of M v equals the length of v times the length of M u, which is less than or equal to norm M times the length of v because the length of (M u) is less than or equal to norm M by definition of the operator norm.

-

Let u be a unit vector. We have the length of M_1 M_2 u is less than or equal to norm M_1 times the length of M_2 u, which is less than or equal to norm M_1 times norm M_2 times length of u, which equals norm M_1 times norm M_2. using the first part of the lemma twice. This shows that the things we are maximising over to get norm of the product (M_1 times M_2) are all less than norm M_1 times norm M_2, so norm of the product M_1 times M_2 is less than or equal to norm M_1 times norm M_2.

-

Lastly, norm of (M to the p) is less than or equal to norm of (M to the p minus one) times norm M, which is less than or equal to dot dot dot (norm of M) to the p using the previous part of the lemma inductively.