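The determinant gives us a homomorphism $\det\colon GL(n,\mathbb{R})\to GL(1,\mathbb{R})$, by sending a matrix $M$ to the 1-by-1 matrix $(\det M)$. This is a homomorphism because $\det(MN)=\det(M)\det(N)$ and $\det(I)=1$.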

Smooth homomorphisms

Homomorphisms

Recap

Recall that a homomorphism is a map $F\colon G\to H$ between two groups such that $F(g_1g_2)=F(g_1)F(g_2)$ for all $g_1,g_2\in G$ and $F(1)=1$. In other words, $F$ is a map which intertwines the group structures on $G$ and on $H$. Homomorphisms play a key role in group theory, and we will focus on the case when $G$ and $H$ are matrix groups.
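The two defining conditions can be checked numerically for the determinant. The following is a minimal sketch, not part of the course material: it uses only the Python standard library and restricts to 2-by-2 matrices, and the helper names `matmul`, `det2` and `random_matrix` are made up for this illustration.

```python
import random

def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def det2(m):
    """Determinant of a 2-by-2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def random_matrix(rng):
    """A random 2-by-2 matrix with entries in [-1, 1] (hypothetical helper)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(2)]

rng = random.Random(0)  # fixed seed so that the run is reproducible
M, N = random_matrix(rng), random_matrix(rng)
identity = [[1.0, 0.0], [0.0, 1.0]]

# Multiplicativity: det(MN) should agree with det(M) det(N) up to rounding.
lhs = det2(matmul(M, N))
rhs = det2(M) * det2(N)
print(abs(lhs - rhs) < 1e-12)

# The identity matrix is sent to the identity of GL(1, R), i.e. to 1.
print(det2(identity) == 1.0)
```

The identity $\det(MN)=\det(M)\det(N)$ holds for all square matrices, so the check does not need the random samples to be invertible.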

Smoothness

In this course we are trying to use tools from calculus to study groups and homomorphisms, so we would like to be able to differentiate $F$. You know how to differentiate a function of one real variable. You know how to differentiate a map from $\mathbb{R}^m$ to $\mathbb{R}^n$ by taking partial derivatives of components. But how can we differentiate a map between two matrix groups?

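A homomorphism $F\colon G\to H$ of matrix groups is smooth if it is infinitely-differentiable when viewed in local exponential charts.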

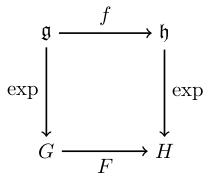

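We have exponential maps $\exp\colon\mathfrak{g}\to G$ and $\exp\colon\mathfrak{h}\to H$, where $\mathfrak{g}$ and $\mathfrak{h}$ denote the Lie algebras of $G$ and $H$, which (as was proved in an optional video) are locally invertible. When we view a map $F$ in local exponential charts, it means that we get a (locally-defined) map $f\colon\mathfrak{g}\to\mathfrak{h}$ which makes the square formed by $f$, $F$ and the two exponential maps commute.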

In other words, $f=\log\circ F\circ\exp$ wherever that makes sense, i.e. on some neighbourhood of the zero-matrix in $\mathfrak{g}$, where $\log$ denotes the local inverse of $\exp$. This is a map between open subsets of vector spaces and so it is infinitely-differentiable if all possible iterated partial derivatives of all components exist.

Note that $\exp(f(X))=F(\exp(X))$ for all $X$ in the neighbourhood where $f$ is defined. You can see this by taking $\exp$ on both sides of $f=\log\circ F\circ\exp$.

Main result

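If $F\colon G\to H$ is a smooth homomorphism of matrix groups then:

- The map $f=\log\circ F\circ\exp$ is the restriction of a linear map $F_*\colon\mathfrak{g}\to\mathfrak{h}$ to a neighbourhood of the zero-matrix.
- $\exp(F_*X)=F(\exp(X))$ for all $X\in\mathfrak{g}$.
- $F_*[X,Y]=[F_*X,F_*Y]$ for all $X,Y\in\mathfrak{g}$.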

We'll prove this theorem in a later video, but let us remark that this third result is not unexpected: we obtain $F_*$ by "taking $F$ inside the exponential", and the Baker-Campbell-Hausdorff formula told us that the group product $\exp(X)\exp(Y)$ is determined by $X$, $Y$, and iterated brackets between them, so if $F$ preserves group multiplication, it's not surprising that $F_*$ preserves the bracket.
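For reference, here are the first few terms of the Baker-Campbell-Hausdorff formula in one standard form (the presentation used elsewhere in the course may differ):

$$\log\bigl(\exp(X)\exp(Y)\bigr)=X+Y+\tfrac{1}{2}[X,Y]+\tfrac{1}{12}[X,[X,Y]]-\tfrac{1}{12}[Y,[X,Y]]+\cdots$$

Every term on the right is built from $X$, $Y$ and iterated brackets. A linear map which preserves brackets can therefore be applied term by term, giving the same series in the images of $X$ and $Y$. This is the heuristic behind the third property.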

Example

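Since $\det\colon GL(n,\mathbb{R})\to GL(1,\mathbb{R})$ is a smooth homomorphism, this theorem tells us that there is a linear map $\det_*\colon\mathfrak{gl}(n,\mathbb{R})\to\mathfrak{gl}(1,\mathbb{R})$ such that $\det(\exp(M))=\exp(\det_*M)$ for all matrices $M$. It turns out that $\det_*=\mathrm{Tr}$. So

$$\det(\exp(M))=\exp(\mathrm{Tr}\,M).$$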
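The identity $\det(\exp(M))=\exp(\mathrm{Tr}\,M)$ can be sanity-checked numerically. The sketch below is an illustration only, not part of the course material. It uses the Python standard library and approximates the matrix exponential by a truncated power series, which is adequate for small matrices with modest entries. The helper names `matmul`, `expm`, `det2` and `trace`, the number of terms, and the sample matrix are all choices made for this example.

```python
import math

def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def expm(m, terms=30):
    """Approximate exp(m) by the partial sum of m^k / k! for k < terms."""
    n = len(m)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # current term m^k / k!, starting at k = 0
    for k in range(1, terms):
        # Build m^k / k! from m^(k-1) / (k-1)! by multiplying by m and dividing by k.
        term = [[x / k for x in row] for row in matmul(term, m)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

def det2(m):
    """Determinant of a 2-by-2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def trace(m):
    """Sum of the diagonal entries."""
    return sum(m[i][i] for i in range(len(m)))

M = [[0.3, -1.2], [0.7, 0.5]]  # arbitrary sample matrix
lhs = det2(expm(M))            # det(exp(M))
rhs = math.exp(trace(M))       # exp(Tr M)
print(lhs, rhs)
```

The two printed numbers should agree up to rounding error. A numerical check like this is evidence for the identity on one matrix, not a proof.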

Why is it true that $\det_*=\mathrm{Tr}$? By the theorem, $\det_*$ is linear, and so is $\mathrm{Tr}$, so it suffices to check that they agree on a basis for $\mathfrak{gl}(n,\mathbb{R})$.

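Let's use the basis of matrices $E_{ij}$ whose entries are all zero except for one which is 1 (the entry in row $i$, column $j$). First suppose that we pick a basis element with a 1 on the diagonal, like $E_{11}$. Then $\exp(E_{11})$ is the diagonal matrix with diagonal entries $e,1,\ldots,1$, so $\det(\exp(E_{11}))=e=\exp(1)=\exp(\mathrm{Tr}\,E_{11})$. Comparing with $\det(\exp(E_{11}))=\exp(\det_*E_{11})$ gives $\det_*E_{11}=1=\mathrm{Tr}\,E_{11}$, as required.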

Pre-class exercise

If $E_{ij}$ has a 1 on the off-diagonal, I'll leave it as an exercise to check that $\det(\exp(E_{ij}))=1=\exp(\mathrm{Tr}\,E_{ij})$.