Before we classify the representations of $SU(3)$, I want to introduce a representation which makes sense for any Lie group, the adjoint representation, and study it for $SU(3)$.

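Given a matrix group $G$ with Lie algebra $\mathfrak{g}$, the adjoint representation is the homomorphism $\mathrm{Ad}\colon G\to GL(\mathfrak{g})$ defined by
$$\mathrm{Ad}(g)X = gXg^{-1}.$$
In other words:

- the vector space on which we're acting is $\mathfrak{g}$,
- $\mathrm{Ad}(g)$ is a linear map $\mathfrak{g}\to\mathfrak{g}$.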

It is an exercise to check that this is a representation, but I will explain why it is well-defined, in other words why $gXg^{-1}$ is in $\mathfrak{g}$ when $g\in G$ and $X\in\mathfrak{g}$.

Lemma: If $g\in G$ and $X\in\mathfrak{g}$ then $gXg^{-1}\in\mathfrak{g}$.

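Proof: We need to show that for all $t\in\mathbb{R}$, $\exp(t\,gXg^{-1})\in G$. As a power series, this is:
$$\exp(t\,gXg^{-1}) = 1 + t\,gXg^{-1} + \frac{t^2}{2!}\,gXg^{-1}gXg^{-1} + \frac{t^3}{3!}\,gXg^{-1}gXg^{-1}gXg^{-1} + \cdots$$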

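All of the $g^{-1}g$s sandwiched between the $X$s cancel and we get
$$\exp(t\,gXg^{-1}) = g\left(1 + tX + \frac{t^2}{2!}X^2 + \frac{t^3}{3!}X^3 + \cdots\right)g^{-1} = g\exp(tX)g^{-1}.$$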

Since $g\in G$, $g^{-1}\in G$ and $\exp(tX)\in G$ for all $t$, we see that this product is in $G$ for all $t$, which proves the lemma.
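A numerical sanity check of the lemma can be reassuring (it is no substitute for the proof). The following is a minimal sketch assuming NumPy is available; it takes $G = SL(2,\mathbb{R})$, whose Lie algebra is the tracefree real $2\times 2$ matrices, picks one $g$ and one $X$ (arbitrary choices), and checks that $gXg^{-1}$ is tracefree and that $\exp(t\,gXg^{-1}) = g\exp(tX)g^{-1}$ for a few values of $t$. The helper `expm_series` is a hypothetical name for a truncated power series, used so that the sketch needs nothing beyond NumPy.

```python
import numpy as np


def expm_series(A, terms=40):
    """Matrix exponential via the truncated power series sum_{k<terms} A^k / k!."""
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ A / k  # term is now A^k / k!
        result = result + term
    return result


# An element g of SL(2, R) (det g = 2*1 - 1*1 = 1) and a tracefree X in sl(2, R).
g = np.array([[2.0, 1.0], [1.0, 1.0]])
X = np.array([[0.5, 2.0], [-1.0, -0.5]])
g_inv = np.linalg.inv(g)

conj = g @ X @ g_inv  # Ad(g)X = g X g^{-1}
print("trace of gXg^-1:", np.trace(conj))
print("det exp(gXg^-1):", np.linalg.det(expm_series(conj)))

# exp(t gXg^{-1}) should agree with g exp(tX) g^{-1}, a product of elements of G.
for t in [0.1, 0.5, 1.0]:
    lhs = expm_series(t * conj)
    rhs = g @ expm_series(t * X) @ g_inv
    print(f"t = {t}: agree?", np.allclose(lhs, rhs))
```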

The induced map on Lie algebras is $\mathrm{ad} := \mathrm{Ad}_*\colon \mathfrak{g}\to\mathfrak{gl}(\mathfrak{g})$. (I apologise for the profusion of g's playing different notational roles.)

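Let's calculate $\mathrm{ad}(X)$ for some $X\in\mathfrak{g}$. This is a linear map $\mathfrak{g}\to\mathfrak{g}$, so let's apply it to some $Y\in\mathfrak{g}$:
$$\mathrm{ad}(X)Y = \left.\frac{d}{dt}\right|_{t=0}\mathrm{Ad}(\exp(tX))Y.$$
(This is how we calculate $R_*$ for any representation $R$: it follows by differentiating $R(\exp(tX)) = \exp(tR_*(X))$ with respect to $t$.) This gives:
$$\mathrm{ad}(X)Y = \left.\frac{d}{dt}\right|_{t=0}\exp(tX)\,Y\exp(-tX) = XY - YX.$$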

In other words,
$$\mathrm{ad}(X)Y = [X,Y].$$
Note that this makes sense even without reference to $G$.
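The differentiation step can also be checked numerically. This sketch (again assuming NumPy, with the same hypothetical `expm_series` helper) approximates $\left.\frac{d}{dt}\right|_{t=0}\exp(tX)Y\exp(-tX)$ by a central difference with step `h` (an arbitrary choice) and compares it with $XY - YX$ for random real $3\times 3$ matrices.

```python
import numpy as np


def expm_series(A, terms=40):
    """Matrix exponential via the truncated power series sum_{k<terms} A^k / k!."""
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for k in range(1, terms):
        term = term @ A / k
        result = result + term
    return result


def ad_by_differentiation(X, Y, h=1e-5):
    """Central-difference estimate of d/dt|_{t=0} exp(tX) Y exp(-tX)."""
    def path(t):
        return expm_series(t * X) @ Y @ expm_series(-t * X)
    return (path(h) - path(-h)) / (2 * h)


rng = np.random.default_rng(0)
X = rng.standard_normal((3, 3))
Y = rng.standard_normal((3, 3))

approx = ad_by_differentiation(X, Y)
exact = X @ Y - Y @ X  # the commutator [X, Y]
print("max discrepancy:", np.max(np.abs(approx - exact)))
```

The discrepancy is of the size expected from the finite-difference step, and it shrinks as `h` decreases until floating-point rounding takes over.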

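Since $\mathrm{ad} = \mathrm{Ad}_*$, we know already that it's a representation of Lie algebras, but it's possible to prove this directly from the axioms of a Lie algebra, without reference to the group, i.e. to show that $\mathrm{ad}([X,Y]) = [\mathrm{ad}(X),\mathrm{ad}(Y)]$ for all $X,Y\in\mathfrak{g}$. Do this!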
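Before proving the identity it can help to test it. This sketch (assuming NumPy; `bracket` and `ad` are hypothetical helper names) applies both sides of $\mathrm{ad}([X,Y]) = [\mathrm{ad}(X),\mathrm{ad}(Y)]$ to a third random matrix $Z$ in $\mathfrak{gl}(3,\mathbb{R})$. Agreement on random inputs is evidence, not a proof.

```python
import numpy as np


def bracket(A, B):
    """Matrix commutator [A, B] = AB - BA."""
    return A @ B - B @ A


def ad(X):
    """ad(X) as a linear map on matrices: Z -> [X, Z]."""
    return lambda Z: bracket(X, Z)


rng = np.random.default_rng(1)
X, Y, Z = (rng.standard_normal((3, 3)) for _ in range(3))

lhs = ad(bracket(X, Y))(Z)               # ad([X, Y]) applied to Z
rhs = ad(X)(ad(Y)(Z)) - ad(Y)(ad(X)(Z))  # [ad(X), ad(Y)] applied to Z
print("identity holds on this sample:", np.allclose(lhs, rhs))
```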

Recall that we have a basis $H$, $X$, $Y$ for $\mathfrak{sl}(2,\mathbb{C})$. Let's compute $\mathrm{ad}(H)$ with respect to this basis.

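$\mathrm{ad}(H)$ sends:

- $H$ to $[H,H] = 0$,
- $X$ to $[H,X] = 2X$,
- $Y$ to $[H,Y] = -2Y$,

so
$$\mathrm{ad}(H) = \begin{pmatrix} 0 & 0 & 0\\ 0 & 2 & 0\\ 0 & 0 & -2\end{pmatrix}$$
with respect to this basis.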

In fact, the action of $H$ on a representation tells us the weights, so we see that the weights of the adjoint representation are $-2, 0, 2$. In particular, the adjoint representation is isomorphic to $\mathrm{Sym}^2(\mathbb{C}^2)$.
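The computation of $\mathrm{ad}(H)$ can be automated by recording the coordinates of $[H,B]$ for each basis element $B$ as a column. This is a minimal sketch assuming NumPy and the usual basis $H = \mathrm{diag}(1,-1)$, $X = E_{12}$, $Y = E_{21}$ of $\mathfrak{sl}(2,\mathbb{C})$ (assumed here, since the basis is only recalled, not written out); `coords` and `ad_matrix` are hypothetical helper names.

```python
import numpy as np

# The usual basis of sl(2, C): H = diag(1, -1), X = E_12, Y = E_21.
H = np.array([[1, 0], [0, -1]])
X = np.array([[0, 1], [0, 0]])
Y = np.array([[0, 0], [1, 0]])
basis = [H, X, Y]


def coords(M):
    """Coordinates of a tracefree 2x2 matrix [[a, b], [c, -a]] = aH + bX + cY."""
    return np.array([M[0, 0], M[0, 1], M[1, 0]])


def ad_matrix(A):
    """Matrix of ad(A) in the basis H, X, Y: column j holds coords of [A, basis[j]]."""
    return np.column_stack([coords(A @ B - B @ A) for B in basis])


print(ad_matrix(H))  # diagonal entries 0, 2, -2 are the weights
```

Calling `ad_matrix(X)` and `ad_matrix(Y)` gives a way to check your answers to the exercise of computing $\mathrm{ad}(X)$ and $\mathrm{ad}(Y)$.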

It's an exercise to compute $\mathrm{ad}(X)$ and $\mathrm{ad}(Y)$.

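Let's find a basis of $\mathfrak{sl}(3,\mathbb{C})$. Define $E_{ij}$ to be the matrix with zeros everywhere except in position $(i,j)$ where there is a 1, e.g.
$$E_{12} = \begin{pmatrix} 0 & 1 & 0\\ 0 & 0 & 0\\ 0 & 0 & 0\end{pmatrix}.$$
There are 6 such matrices with $i\neq j$. Together with
$$H_{12} = \begin{pmatrix} 1 & 0 & 0\\ 0 & -1 & 0\\ 0 & 0 & 0\end{pmatrix},\qquad H_{23} = \begin{pmatrix} 0 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & -1\end{pmatrix}$$
this gives us a basis of $\mathfrak{sl}(3,\mathbb{C})$; in other words, any tracefree complex $3\times 3$ matrix can be written as a complex linear combination of these 8 (it's an 8-dimensional Lie algebra).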
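To double-check the dimension count, here is a sketch (assuming NumPy) that builds the six $E_{ij}$ with $i\neq j$ together with $H_{12} = \mathrm{diag}(1,-1,0)$ and $H_{23} = \mathrm{diag}(0,1,-1)$, then verifies that all eight are tracefree and that, flattened into vectors of length 9, they have rank 8. Since the tracefree $3\times 3$ matrices form an 8-dimensional space, this confirms they are a basis. The helper `E` is a hypothetical name.

```python
import numpy as np
from itertools import permutations


def E(i, j):
    """E_ij: the 3x3 matrix with a 1 in position (i, j) (1-indexed), 0 elsewhere."""
    M = np.zeros((3, 3))
    M[i - 1, j - 1] = 1.0
    return M


H12 = np.diag([1.0, -1.0, 0.0])
H23 = np.diag([0.0, 1.0, -1.0])
offdiag = [E(i, j) for i, j in permutations(range(1, 4), 2)]  # the six E_ij, i != j
basis = [H12, H23] + offdiag

# Every basis element is tracefree...
print("all tracefree:", all(abs(np.trace(M)) < 1e-12 for M in basis))
# ...and the 8 flattened matrices are linearly independent.
print("rank:", np.linalg.matrix_rank(np.array([M.ravel() for M in basis])))
```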

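More generally, we will write
$$H_a = \begin{pmatrix} a_1 & 0 & 0\\ 0 & a_2 & 0\\ 0 & 0 & a_3\end{pmatrix}$$
where $a = (a_1,a_2,a_3)$ is a vector satisfying
$$a_1 + a_2 + a_3 = 0.$$
I want to compute $\mathrm{ad}(H_a)$.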

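We have $\mathrm{ad}(H_a)H_b = [H_a,H_b] = 0$ because the $H$-matrices are all diagonal (and hence all commute with one another). This means that $H_{12}$ and $H_{23}$ are contained in the zero-weight space of the adjoint representation. This is because the weights of a representation $R$ are read off from the eigenvalues of $R_*(H_a)$, and for the adjoint representation $R_* = \mathrm{ad}$, so the eigenvalues of $\mathrm{ad}(H_a)$ tell us the weights of the representation.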

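It turns out that $\mathrm{ad}(H_a)E_{ij} = (a_i - a_j)E_{ij}$. For example:
$$[H_a,E_{12}] = H_aE_{12} - E_{12}H_a = \begin{pmatrix} 0 & a_1 & 0\\ 0 & 0 & 0\\ 0 & 0 & 0\end{pmatrix} - \begin{pmatrix} 0 & a_2 & 0\\ 0 & 0 & 0\\ 0 & 0 & 0\end{pmatrix} = (a_1 - a_2)E_{12}.$$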
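The general formula holds because multiplying by the diagonal matrix $H_a$ on the left scales row $i$ by $a_i$, while multiplying on the right scales column $j$ by $a_j$. This sketch (assuming NumPy; `E` is a hypothetical helper) checks $[H_a,E_{ij}] = (a_i - a_j)E_{ij}$ for all six pairs $i\neq j$, using a random $a$ shifted so that $a_1+a_2+a_3=0$.

```python
import numpy as np
from itertools import permutations


def E(i, j):
    """E_ij: the 3x3 matrix with a 1 in position (i, j) (1-indexed), 0 elsewhere."""
    M = np.zeros((3, 3))
    M[i - 1, j - 1] = 1.0
    return M


rng = np.random.default_rng(2)
a = rng.standard_normal(3)
a -= a.mean()  # enforce a_1 + a_2 + a_3 = 0
H_a = np.diag(a)

results = {}
for i, j in permutations(range(1, 4), 2):
    bracket = H_a @ E(i, j) - E(i, j) @ H_a  # [H_a, E_ij]
    results[(i, j)] = np.allclose(bracket, (a[i - 1] - a[j - 1]) * E(i, j))
print(results)
```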

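Let's figure out the weights of the adjoint representation. If $E_{ij}$ has weight $k = (k_1,k_2,k_3)$ then we have $\mathrm{ad}(H_a)E_{ij} = (k_1a_1 + k_2a_2 + k_3a_3)E_{ij}$, so $k_1a_1 + k_2a_2 + k_3a_3 = a_i - a_j$. For example, $E_{12}$ satisfies $\mathrm{ad}(H_a)E_{12} = (a_1 - a_2)E_{12}$, so $k = (1,-1,0)$.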

Similarly, we get $\mathrm{ad}(H_a)E_{21} = (a_2 - a_1)E_{21}$, so $E_{21}$ has weight $(-1,1,0)$.

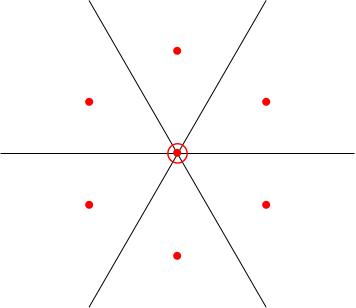

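The other weight spaces that occur are:

- the span of $E_{13}$, with weight $(1,0,-1)$,
- the span of $E_{31}$, with weight $(-1,0,1)$,
- the span of $E_{23}$, with weight $(0,1,-1)$,
- the span of $E_{32}$, with weight $(0,-1,1)$.

The weight diagram is the hexagon shown in the figure.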

Note that the zero-weight space is spanned by $H_{12}$ and $H_{23}$, which means it's 2-dimensional. We've denoted this by putting a circle around the dot at the origin in the weight diagram.
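Putting the pieces together, this sketch (assuming NumPy; `E` and `coords` are hypothetical helpers) writes $\mathrm{ad}(H_a)$ as an $8\times 8$ matrix in the basis $H_{12} = \mathrm{diag}(1,-1,0)$, $H_{23} = \mathrm{diag}(0,1,-1)$, $E_{ij}$ ($i\neq j$), for one arbitrarily chosen $a = (2,-\tfrac12,-\tfrac32)$. It assumes the convention that a vector has weight $k$ when $H_a$ acts on it by $k_1a_1+k_2a_2+k_3a_3$, and confirms that the eigenvalue $0$ occurs twice while each $E_{ij}$ has eigenvalue $a_i - a_j$.

```python
import numpy as np
from itertools import permutations


def E(i, j):
    """E_ij: the 3x3 matrix with a 1 in position (i, j) (1-indexed), 0 elsewhere."""
    M = np.zeros((3, 3))
    M[i - 1, j - 1] = 1.0
    return M


pairs = list(permutations(range(1, 4), 2))  # the six (i, j) with i != j
H12 = np.diag([1.0, -1.0, 0.0])
H23 = np.diag([0.0, 1.0, -1.0])
basis = [H12, H23] + [E(i, j) for i, j in pairs]


def coords(M):
    """Coordinates of a tracefree 3x3 matrix in the basis H12, H23, E_ij.

    The diagonal part is x*H12 + y*H23 = diag(x, y - x, -y), so x = M[0,0], y = -M[2,2].
    """
    return np.array([M[0, 0], -M[2, 2]] + [M[i - 1, j - 1] for i, j in pairs])


a = np.array([2.0, -0.5, -1.5])  # sample a with a_1 + a_2 + a_3 = 0
H_a = np.diag(a)
# Column n holds the coordinates of ad(H_a)(basis[n]) = [H_a, basis[n]].
ad_Ha = np.column_stack([coords(H_a @ B - B @ H_a) for B in basis])

eigenvalues = np.linalg.eigvals(ad_Ha).real
print("zero-weight space dimension:", int(np.sum(np.isclose(eigenvalues, 0.0))))

# Each E_ij is a weight vector of weight k = e_i - e_j, with eigenvalue k . a = a_i - a_j.
for n, (i, j) in enumerate(pairs):
    k = np.zeros(3)
    k[i - 1] += 1
    k[j - 1] -= 1
    print(f"E_{i}{j}: weight {k.astype(int).tolist()}, eigenvalue {ad_Ha[n + 2, n + 2]}")
```

The matrix comes out diagonal because every basis vector is a weight vector; that is exactly the statement that this basis realises the weight space decomposition.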

The weight space decomposition of the adjoint representation is sufficiently important to warrant its own name: it's called the root space decomposition. The nonzero weights that occur are called roots, and the weight diagram is called a root diagram.
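One way to see that the root diagram is a regular hexagon: the roots $e_i - e_j$ all lie in the plane $k_1+k_2+k_3=0$, so projecting onto an orthonormal basis $u, v$ of that plane gives honest 2D coordinates. This sketch assumes NumPy and the standard dot product on weights; the particular $u$ and $v$ are my choice, and any other orthonormal basis of the plane only rotates or reflects the picture.

```python
import numpy as np
from itertools import permutations

# The six roots e_i - e_j (i != j), as vectors in R^3.
roots = [np.eye(3)[i] - np.eye(3)[j] for i, j in permutations(range(3), 2)]

# An orthonormal basis (u, v) of the plane k_1 + k_2 + k_3 = 0 containing the roots.
u = np.array([1.0, -1.0, 0.0]) / np.sqrt(2)
v = np.array([1.0, 1.0, -2.0]) / np.sqrt(6)
points = [(r @ u, r @ v) for r in roots]

lengths = np.array([np.hypot(x, y) for x, y in points])
angles = np.sort([np.degrees(np.arctan2(y, x)) % 360 for x, y in points])
print("lengths:", np.round(lengths, 6))
print("angles (degrees):", np.round(angles, 6))
```

With $u$ along the root $e_1 - e_2$, the six points sit at angles $0^\circ, 60^\circ, \ldots, 300^\circ$, all at distance $\sqrt{2}$ from the origin.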

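The matrices inhabit the following weight spaces: each $E_{ij}$ with $i\neq j$ has weight $e_i - e_j$ (where $e_1, e_2, e_3$ are the standard basis vectors of $\mathbb{Z}^3$), while $H_{12}$ and $H_{23}$ span the zero-weight space; the weight diagram is the hexagon shown above.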